Optical Character Recognition

An investigation into custom and built-in machine learning algorithms for optical character recognition (OCR) on the EMNIST hand-drawn character dataset. This is done using MATLAB code. This experiment compares a custom K-Nearest Neighbour algorithm with two varying distance measures against the built-in: K-Nearest Neighbour and SVM Multiclass from MATLAB.

Introduction:

Optical Character Recognition is the process of converting handwritten text into machine-encoded text through mediums such as documents, written papers, or images. Developing automated methods for this using machine learning and deep learning of large datasets is important for producing AI models that can appropriately recognise and convert this text. In this work, we aim to compare varying classification models for their efficiency and accuracy in creating an AI model for Optical Character Recognition. This has many uses such as data entry in transcribing written records into a digital form.

The EMNIST dataset is a collection of 26,000 images. The format of this data is 26,000 28x28 pixel images with each pixel represented by a numerical value. Each image is also provided with a corresponding label as to the correct digital character that the handwritten image refers to. Data is provided as a 1x784 vector and variable labels stored as a column vector. We convert the images into a double type so that it can be scaled accurately unlike an integer. We also convert the labels into a categorical array for further processing. The data is split into training and testing subsets to avoid overfitting. A random permutation of the 26,000 images is created and used to make a 50/50 split of training and testing subsets.

Methodology:

In this study we compare various classification models and distance measures to classify the test data. We implement the K-nearest neighbour classification model. This model measures the distance from our test point to the nearest points. It then looks at the k-nearest of these and sets the value of the test point to the most common labelled point nearby. We use the k-value of 28 as a compromise for efficiency and accuracy. In this method we compare the impact of using Euclidean distance against using L1 or Manhattan distance. We then also compare two models from MatLab using pre-defined functions for K-nearest neighbour and SVM for Multiclass. SVM for Multiclass works by splitting data into linearly separated groups.

Code Overview:

This section presents specific parts of the MATLAB code with further annotation and description. Important parts include:

Custom K-Nearest Neighbour algorithms.

Confusion Matrix Evaluation.

Main.m - Custom KNN Snippet% Custom KNN prediction1 = categorical.empty(size(tefeatures, 1), 0); prediction2 = categorical.empty(size(tefeatures, 1), 0); k = 28; for i= 1:size(tefeatures, 1) comp1 = trfeatures; comp2 = repmat(tefeatures(i, :), [size(trfeatures, 1), 1]); l2 = sum((comp1-comp2).^2, 2); [~, ind] = sort(l2); ind = ind(1:k); labs = trlabels(ind); prediction1(i, 1) = mode(labs); i end for i = 1:size(tefeatures, 1) comp1 = trfeatures; comp2 = repmat(tefeatures(i, :), [size(trfeatures, 1), 1]); l1 = sum(abs(comp1-comp2), 2); [~, ind] = sort(l1); ind = ind(1: k); labs = trlabels(ind); prediction2(i, 1) = mode(labs); i end % Built-in Algorithms knnmodel = fitckn(trfeatures, trlabels); predictedknn = predict(knnmodel, tefeatures); svmmodel = fitcecoc(trfeatures, trlabels); predictedsvm = predict(svmmodel, tefeatures);

We create two empty categorical arrays for our prediction output for the custom KNN models. We then set our K

parameter for K-NN which determines how many nearby points are compared. Then for each model we compare our testing

features and training features and use our distance model to index the nearest points. The testing point is then compared

to the nearest k training points and a prediction class is made and stored in our initial categorical array.

This is then done for all 13,000 values and for each custom KNN method.

We then use the fitckn() and predict() methods to use the built-in KNN Matlab model and the fitcecoc() and predict()

methods to use the built-in SVM Matlab model

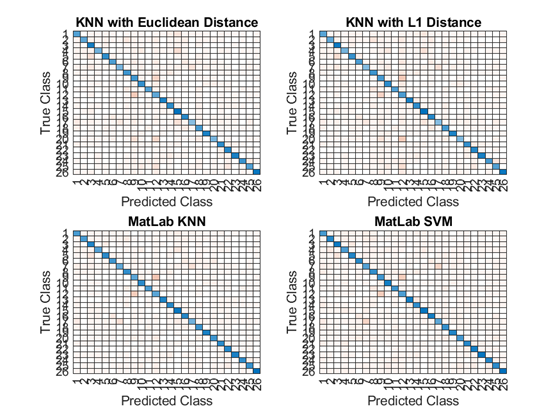

Main.m - Confusion Matrix Snippet% Confusion Matrix figure(2) subplot(2, 2, 1); knn1CM = confusionchart(telabels, prediction1); title("KNN with Euclidean Distance"); subplot(2, 2, 2); knn2CM = confusionchart(telabels, predction2); title("KNN with Manhattan Distance"); subplot(2, 2, 3); knnmodelCM = confusionchart(telabels, predictedknn); title("MatLab KNN"); subplot(2, 2, 4); svmmodelCM = confusionchart(telabels, predictedsvm); title("Matlab SVM"); fname = './Results'; if ~exist(fname, 'dir') mkdir(fname); disp(['Folder "', fname, '"created.']); else disp(['Folder "', fname, '" already exists.']); end saveas(gcf, fullfile(fname, "Confusion.png"));

This code creates a new figure with 2 rows and 2 columns. For each position within this structure we map a confusion chart between the testing data labels and our predicted labels to compare and see which are correct. We then title them to be able to read our data. Once this is done we check to see if the 'Results' directory exists and if so save the file there. If not we create this directory and save the file there.

Results:

| Classification Model | Time of Processing (s) | Correct Predictions (/13k) | Accuracy (%) |

|---|---|---|---|

| KNN Custom Euclidean | 424 | 9,496 | 73.05 |

| KNN Custom Manhattan | 423 | 9,202 | 70.78 |

| KNN Matlab | 21.5 | 10,096 | 77.66 |

| SVM Matlab | 41.3 | 9,518 | 73.22 |

The Custom KNN model is far slower to process than the built-in functions which is to be expected as pre-built functions are optimised in machine code already without the need for compilation or interpretation. The distance measures have very similar time of processing. Manhattan is quicker as it doesn’t need calculating of square numbers however this is a large dataset, and this advantage doesn’t apply. The MatLab KNN is ~20 times quicker and has a greater accuracy making it more efficient and better. SVM has a slower processing time as it classifies the entire data which takes a lot of time on the dataset. This is also not rewarded as the accuracy is less. SVM is often used for text-processing and is efficient on small datasets, so this observation is expected on a large dataset.

In conclusion, our study shows that the K-nearest neighbour classification model is both the most efficient and accurate algorithm for correctly classifying these handwritten images. KNN is very good for implementing on multiclass models however it can be slow on large datasets and struggle with imbalanced data. An accuracy of 77.66% is not good enough for making this OCR model viable in commercial use and so further testing and training of other models is important for increasing these numbers. Further evaluation could also be done to recognise areas of weakness. For example, looking at the confusion charts we see the models have issues with distinguishing between the letter I and L.

Data and Charts:

Reading the Data: Here we can see the confusion charts of the four models. The x and y axis are the Predicted Class and True Class

respectively. The blue diagonal line we can see indicates our successful matches of characters where our model

correctly predicted a hand-drawn letter and matched it to the correct class.

The other boxes also indicate matches but these are incorrect matches. The higher the gradient of orange means the more

incorrect matches. We can see these in places we might expect. For example with the KNN with Euclidean Distance model

we see True 12 is predicted as class 9. In real terms the model predicts that the letter 'l' is the letter 'i' and vice

versa.